J. Carleton, P. Vijaykumar, D. Saxena, D. Narasimha, S. Shakkottai, A. Akella

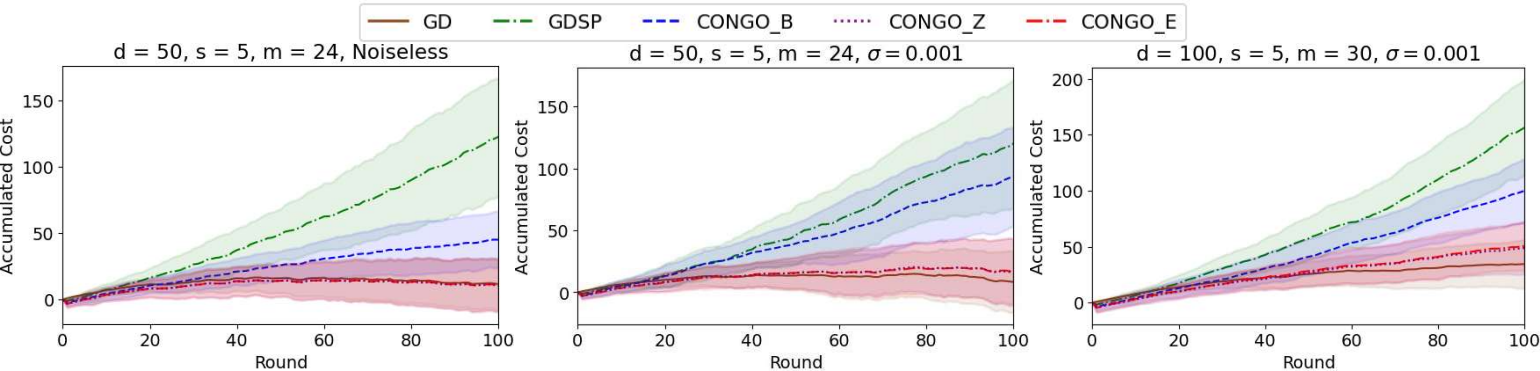

We address the challenge of zeroth-order online convex optimization where the objective function’s gradient exhibits sparsity. Our aim is to …

V. T. Kunde, V. Rajagopalan, C. S. K. Valmeekam, K. Narayanan, S. Shakkottai, D. Kalathil, J.-F. Chamberland

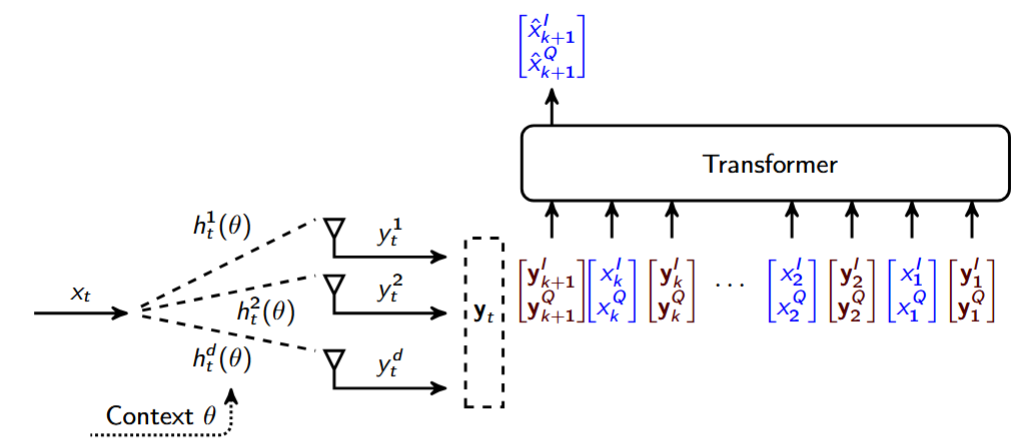

We prove that a single layer softmax attention transformer (SAT) computes the optimal solution of canonical estimation problems in wireless …

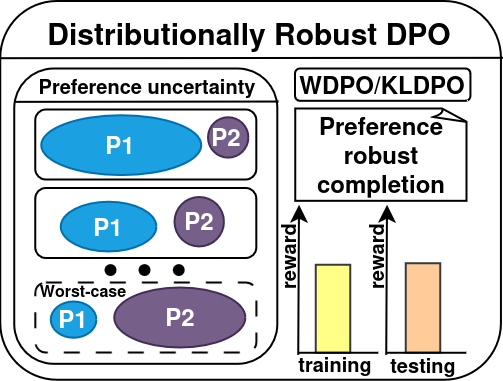

Z. Xu, S. Vemuri, K. Panaganti, D. Kalathil, R. Jain, D. Ramachandran

We propose two novel distributionally robust direct preference optimization (DPO) algorithms, Wasserstein DPO (WDPO) and Kullback-Leibler DPO (KLDPO), …

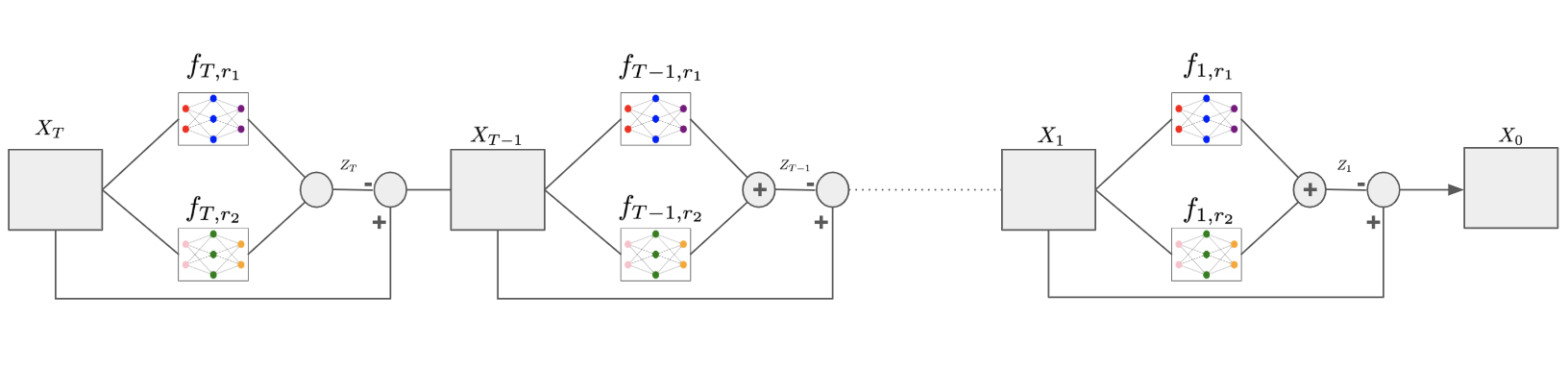

M. Cheng, F. Doudi, D. Kalathil, M. Ghavamzadeh, P. R. Kumar

We propose DiffusionBlend, which enables test-time alignment of Diffusion Models to user-defined weights for objectives and regularization without …

C. S. K. Valmeekam, K. Narayanan, D. Kalathil, J.-F. Chamberland, S. Shakkottai

We show that English text is much more compressible than was previously believed possible. Our lossless compression algorithm combines the …

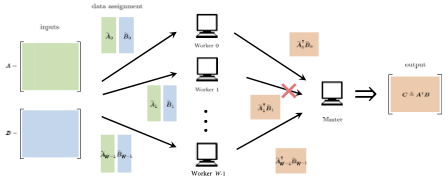

R. Ji, A. K. Pradhan, A. Heidarzadeh, K. Narayanan

In coded distributed matrix multiplication, a master node wishes to compute a large-scale matrix product with the help of several worker nodes. We …

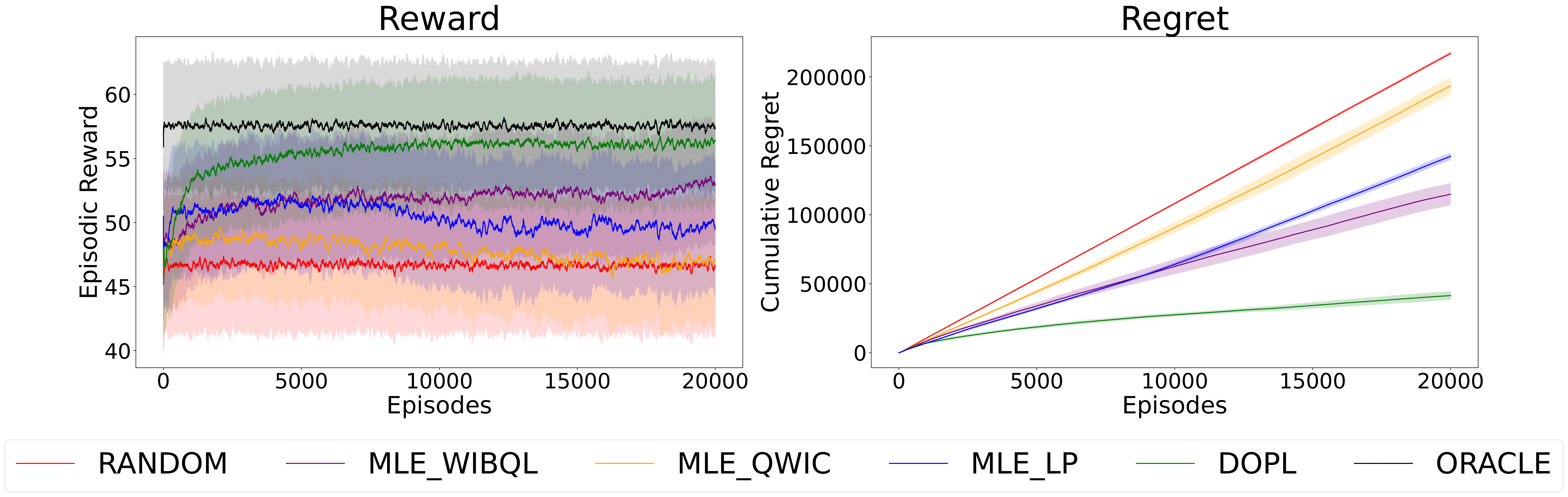

G. Xiong, U. Dinesha, D. Mukherjee, J. Li, S. Shakkottai

Addressing the limitations of conventional scalar rewards, DOPL leverages pairwise preference feedback to navigate restless multi-armed bandit …

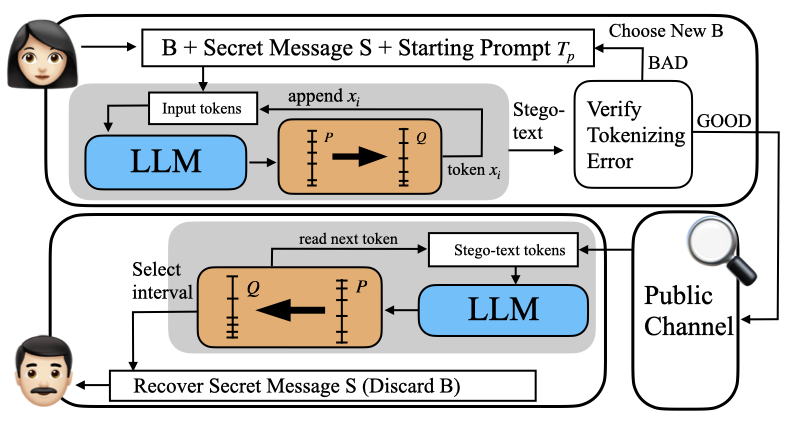

Y. S. Huang, P. Just, K. Narayanan, C. Tian

We explore coverless steganography using a Large Language Model (LLM) to guide an arithmetic coding decoder in generating stego-texts, aiming to embed …

C. S. K. Valmeekam, K. Narayanan, A. Sprintson

We consider a wireless network where each user/sensor wishes to communicate an image to a central base station for the purpose of classifying images …

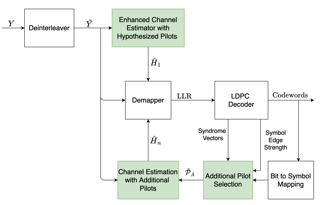

C. S. K. Valmeekam, K. Narayanan

We propose a semi-blind decision-directed joint channel estimation and decoding algorithm for low-density parity-check (LDPC) coded Orthogonal …