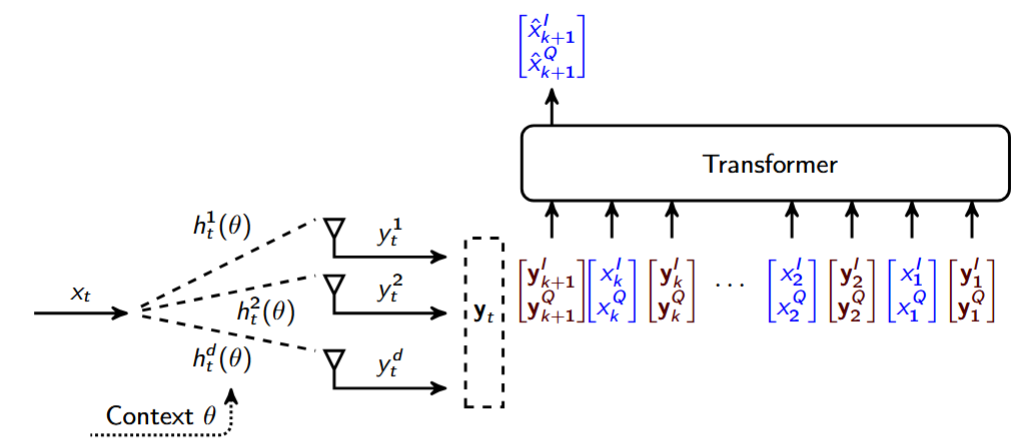

We prove that a single-layer softmax attention transformer (SAT) computes the optimal solution of canonical estimation problems in wireless communications as the prompt length tends to infinity. We also prove that the optimal configuration of such a transformer is indeed the minimizer of the corresponding training loss.
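The following toy sketch illustrates how one softmax attention head can approach an optimal estimator as the prompt grows. It is not the construction used in the paper. It assumes a hypothetical scalar setting: a BPSK symbol x in {-1, +1} is sent over an additive white Gaussian noise channel, y = x + n. In this setting the minimum mean-square-error (MMSE) estimate is E[x | y] = tanh(y / σ²). Each prompt token is assumed to carry a symbol x_i drawn from the uniform prior. The keys and values are those symbols, and the key-query scale is fixed by hand at 1/σ² rather than learned. The names `softmax_attention`, `mmse_bpsk` and `demo` are illustrative only.

```python
import math
import random


def softmax_attention(query, keys, values, scale):
    """Single-head softmax attention for one scalar query token.

    Toy assumption: the score for key k_i is scale * query * k_i
    (a 1x1 key-query product), and the output is the softmax-weighted
    average of the values.
    """
    scores = [scale * query * k for k in keys]
    m = max(scores)  # subtract the max score for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return sum(w * v for w, v in zip(weights, values)) / total


def mmse_bpsk(y, noise_var):
    """Closed-form MMSE estimate E[x | y] for equiprobable x in {-1, +1}, y = x + n."""
    return math.tanh(y / noise_var)


def demo(n_prompt, noise_var=0.5, y_query=0.3, seed=0):
    """Compare the attention output on a random prompt with the MMSE estimate.

    Hypothetical setup: prompt symbols are drawn from the uniform BPSK prior
    and serve as both keys and values. The scale 1/noise_var is fixed by hand.
    """
    rng = random.Random(seed)
    symbols = [rng.choice((-1.0, 1.0)) for _ in range(n_prompt)]
    est = softmax_attention(y_query, symbols, symbols, 1.0 / noise_var)
    return est, mmse_bpsk(y_query, noise_var)


if __name__ == "__main__":
    for n in (10, 1_000, 100_000):
        est, ref = demo(n)
        print(f"n={n:>7}: attention={est:.4f}  MMSE={ref:.4f}")
```

Since x_i² = 1 for BPSK, the weight on symbol x_i is proportional to the likelihood p(y | x_i). The attention output is therefore a self-normalized average of prior samples weighted by their likelihood. As the number of prompt symbols grows, that average converges to the posterior mean tanh(y / σ²). With a perfectly balanced two-token prompt [+1, -1], the output equals tanh(y / σ²) exactly.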